Propaganda Techniques American Mainstream Uses to Brainwash Americans

by Debbie Menon

I believe that running the two articles below, which share a theme, as natural companions might alert the hard-core believers in Mainstream Media propaganda and/or so-called official stories to what is being done to them on a daily basis. The pairing may also illuminate why people believe what they do, and why hard facts that contradict fundamental beliefs usually seem only to reinforce those beliefs in the gullible and thoughtless.

Dr. Cynthia Boaz deals with a common source, FOX News, and its application of standard propaganda and “spin” techniques that we see every day. Because these techniques are used so prolifically and constantly, I suspect the general public in America has become so accustomed to them that it seldom notices them.

There is a monolith of falsehood out there, and FOX News is but one of its foundation blocks. They must be chipped away if we are to topple the entire lie.

Ronald Reagan’s “Welfare Queen” anecdote in the second piece is an incredible example. It reinforces what some of us have always known, and perhaps non-believers might be persuaded by it and benefit from knowing it.

14 Propaganda Techniques Fox “News” Uses to Brainwash Americans[1]

by Dr. Cynthia Boaz, Truthout

There is nothing more sacred to the maintenance of democracy than a free press. Access to comprehensive, accurate and quality information is essential to the manifestation of Socratic citizenship – the society characterized by a civically engaged, well-informed and socially invested populace. Thus, to the degree that access to quality information is willfully or unintentionally obstructed, democracy itself is degraded.

It is ironic that in the era of 24-hour cable news networks and “reality” programming, the news-to-fluff ratio and the overall veracity of information have declined precipitously. Take the fact that Americans now spend on average about 50 hours a week using various forms of media, while cultural literacy levels hover just above the gutter. Not only does Mainstream Media now tolerate gross misrepresentations of fact and history by public figures (highlighted most recently by Sarah Palin’s ludicrous depiction of Paul Revere’s ride), but many media outlets actually legitimize these displays. Pause for a moment and ask yourself what it means that the world’s largest, most profitable and most popular news channel passes off as fact every whim, impulse and outrageously incompetent analysis of its so-called reporters. How did we get here? Take the enormous amount of misinformation that is taken for truth by Fox audiences: the belief that Saddam Hussein had weapons of mass destruction (WMD) and that he was in on 9/11, the belief that climate change isn’t real and/or man-made, the belief that Barack Obama is Muslim and wasn’t born in the United States, the insistence that all Arabs are Muslim and all Muslims are terrorists, the inexplicable perceptions that immigrants are both too lazy to work and about to steal your job. All of these claims are demonstrably false, yet Fox News viewers will maintain their veracity with incredible zeal. Why? Is it simply that we have lost our respect for knowledge?

My curiosity about this question compelled me to sit down and document the most oft-used methods by which willful ignorance has been turned into dogma by Fox News and other propagandists disguised as media. The techniques I identify here also help to explain the simultaneously powerful identification the Fox media audience has with the network, as well as their ardent, reflexive defenses of it.

The good news is that the more conscious you are of these techniques, the less likely they are to work on you. The bad news is that those reading this article are probably the least in need of it.

1. Panic Mongering: This goes one step beyond simple fear mongering. With panic mongering, there is never a break from the fear. The idea is to terrify and terrorize the audience during every waking moment. From Muslims to swine flu to recession to homosexuals to immigrants to the rapture itself, the belief over at Fox seems to be that if your fight-or-flight reflexes aren’t activated, you aren’t alive. This of course raises the question: why terrorize your own audience? Because it is the fastest way to bypass the rational brain. In other words, when people are afraid, they don’t think rationally. And when they can’t think rationally, they’ll believe anything.

2. Character Assassination/Ad Hominem: Fox does not like to waste time debating the idea. Instead, they prefer a quicker route to dispensing with their opponents: go after the person’s credibility, motives, intelligence, character, or, if necessary, sanity. No category of character assassination is off the table and no offense is beneath them. Fox and like-minded media figures also use ad hominem attacks not just against individuals, but against entire categories of people, in an effort to discredit the ideas of every person who is seen to fall into that category, e.g. “liberals,” “hippies,” “progressives” etc. This form of argument – if it can be called that – leaves no room for genuine debate over ideas, so by definition, it is undemocratic. Not to mention just plain crass.

3. Projection/Flipping: This one is frustrating for the viewer who is trying to actually follow the argument. It involves taking whatever underhanded tactic you’re using and then accusing your opponent of doing it to you first. We see this frequently in the immigration discussion, where anti-racists are accused of racism, or in the climate change debate, where those who argue for human causes of the phenomenon are accused of not having science or facts on their side. It’s often called upon when the media host finds themselves on the ropes in the debate.

4. Rewriting History: This is another way of saying that propagandists make the facts fit their worldview. The Downing Street Memos on the Iraq war were a classic example of this on a massive scale, but it happens daily and over smaller issues as well. A recent case in point is Palin’s mangling of the Paul Revere ride, which Fox reporters have bent over backward to validate. Why lie about the historical facts, even when they can be demonstrated to be false? Well, because dogmatic minds actually find it easier to reject reality than to update their viewpoints. They will literally rewrite history if it serves their interests. And they’ll often speak with such authority that the casual viewer will be tempted to question what they knew as fact.

5. Scapegoating/Othering: This works best when people feel insecure or scared. It’s technically a form of both fear mongering and diversion, but it is so pervasive that it deserves its own category. The simple idea is that if you can find a group to blame for social or economic problems, you can then go on to a) justify violence/dehumanization of them, and b) subvert responsibility for any harm that may befall them as a result.

6. Conflating Violence With Power and Opposition to Violence With Weakness: This is more of what I’d call a “meta-frame” (a deeply held belief) than a media technique, but it is constantly manifested in the ways news is reported. For example, terms like “show of strength” are often used to describe acts of repression, such as those by the Iranian regime against the protesters in the summer of 2009. There are several concerning consequences of this form of conflation. First, it has the potential to make people feel falsely emboldened by shows of force – it can turn wars into sporting events. Second, especially in the context of American politics, displays of violence – whether manifested in war or in debates about the Second Amendment – are seen as noble and (in an especially surreal irony) moral. Violence becomes synonymous with power, patriotism and piety.

7. Bullying: This is a favorite technique of several Fox commentators. That it continues to be employed suggests that it has some efficacy. Bullying and yelling work best on people who come to the conversation with a lack of confidence, either in themselves or in their grasp of the subject being discussed. The bully exploits this lack of confidence by berating the guest into submission or compliance. Often, less self-possessed people will feel shame and anxiety when being berated, and the quickest way to end the immediate discomfort is to cede authority to the bully. The bully is then able to interpret that as a “win.”

8. Confusion: As with the preceding technique, this one works best on an audience that is less confident and self-possessed. The idea is to deliberately confuse the argument, but insist that the logic is airtight and imply that anyone who disagrees is either too dumb or too fanatical to follow along. Less independent minds will interpret the confusion technique as a form of sophisticated thinking, thereby giving the user’s claims veracity in the viewer’s mind.

9. Populism: This is especially popular in election years. The speaker identifies themselves as one of “the people” and the target of their ire as an enemy of the people. The opponent is always “elitist” or a “bureaucrat” or a “government insider” or some other category that is not the people. The idea is to make the opponent harder to relate to and harder to empathize with. It often goes hand in hand with scapegoating. A common logical fallacy in populism as used by the right is that the accused “elitists” are almost always liberals – a category of political actors who, by definition, advocate for non-elite groups.

10. Invoking the Christian God: This is similar to othering and populism. With morality politics, the idea is to declare yourself and your allies patriots, Christians and “real Americans” (those are inseparable categories in this line of thinking) and anyone who challenges them as not. Basically, God loves Fox and Republicans and America. And hates taxes and anyone who doesn’t love those other three things. Because the speaker has been anointed by God to speak on behalf of all Americans, any challenge is perceived as immoral. It’s a cheap and easy technique used by all totalitarian entities, from states to cults.

11. Saturation: There are three components to effective saturation: being repetitive, being ubiquitous and being consistent. The message must be repeated over and over, it must be everywhere and it must be shared across commentators: e.g. “Saddam has WMD.” Veracity and hard data have no relationship to the efficacy of saturation. There is a psychological effect of being exposed to the same message over and over, regardless of whether it’s true or if it even makes sense, e.g., “Barack Obama wasn’t born in the United States.” If something is said enough times, by enough people, many will come to accept it as truth. Another example is Fox’s own slogan of “Fair and Balanced.”

12. Disparaging Education: There is an emerging and disturbing lack of reverence for education and intellectualism in many Mainstream Media discourses. In fact, in some circles (e.g. Fox), higher education is often disparaged as elitist. Having a university credential is perceived by these folks as not a sign of credibility, but of a lack of it. Among some commentators, evidence of intellectual prowess is treated snidely and as anti-American. Education and other evidence of training in critical thinking are direct threats to a hive-mind mentality, which is why they are so viscerally demeaned.

13. Guilt by Association: This is a favorite of Glenn Beck and Andrew Breitbart, both of whom have used it to decimate the careers and lives of many good people. Here’s how it works: if your cousin’s college roommate’s uncle’s ex-wife attended a dinner party back in 1984 with Gorbachev’s niece’s ex-boyfriend’s sister, then you, by extension, are a communist set on destroying America. Period.

14. Diversion: This is where, when on the ropes, the media commentator suddenly takes the debate in a weird but predictable direction to avoid accountability. This is the point in the discussion where most Fox anchors start comparing the opponent to Saul Alinsky or invoking ACORN or Media Matters, in a desperate attempt to win through guilt by association. Or they’ll talk about wanting to focus on “moving forward,” as though by analyzing the current state of things or God forbid, how we got to this state of things, you have no regard for the future. Any attempt to bring the discussion back to the issue at hand will likely be called deflection, an ironic use of the technique of projection/flipping.

In debating some of these tactics with colleagues and friends, I have also noticed that the Fox viewership seems to be marked by a sort of collective personality disorder whereby the viewer feels almost as though they’ve been let into a secret society. Something about their affiliation with the network makes them feel privileged, and this affinity is likely what drives the viewers to defend the network so vehemently. They seem to identify with it at a core level, because it tells them they are special and privy to something the rest of us don’t have. It’s akin to the loyalty one feels on being let into a private club or a gang. That effect is also likely to make the propaganda more powerful, because it goes mostly unquestioned.

In considering these tactics and their possible effects on American public discourse, it is important to note that historically, those who’ve genuinely accessed truth have never berated those who did not. You don’t get honored by history when you beat up your opponent: look at Martin Luther King Jr., Robert Kennedy, Abraham Lincoln. These men did not find the need to engage in othering, ad hominem attacks, guilt by association or bullying. This is because when a person has accessed a truth, they are not threatened by the opposing views of others. This reality reveals the righteous indignation of people like Glenn Beck, Bill O’Reilly and Sean Hannity as a symptom of untruth. These individuals are hostile and angry precisely because they don’t feel confident in their own veracity. And in general, the more someone is losing their temper in a debate and the more intolerant they are of listening to others, the more you can be certain they do not know what they’re talking about.

One final observation. Fox audiences, birthers and Tea Partiers often defend their arguments by pointing to the fact that a lot of people share the same perceptions. This is a reasonable point to the extent that Murdoch’s News Corporation reaches a far larger audience than any other single media outlet. But the fact that a lot of people believe something is not necessarily a sign that it’s true; it’s just a sign that it’s been effectively marketed.

As honest, fair and truly intellectual debate degrades before the eyes of the global media audience, the quality of American democracy degrades along with it.

Dr. Cynthia Boaz is assistant professor of political science at Sonoma State University, where her areas of expertise include quality of democracy, nonviolent struggle, civil resistance and political communication and media. She is also an affiliated scholar at the UNESCO Chair of Philosophy for Peace International Master in Peace, Conflict, and Development Studies at Universitat Jaume I in Castellon, Spain. Additionally, she is an analyst and consultant on nonviolent action, with special emphasis on the Iran and Burma cases. She is vice president of the Metta Center for Nonviolence and on the board of Project Censored and the Media Freedom Foundation. Dr. Boaz is also a contributing writer and adviser to Truthout.org and associate editor of Peace and Change Journal.

Why Do People Believe Stupid Stuff, Even When They’re Confronted With the Truth?

by David McRaney, AlterNet

The Misconception: When your beliefs are challenged with facts, you alter your opinions and incorporate the new information into your thinking.

The Truth: When your deepest convictions are challenged by contradictory evidence, your beliefs get stronger.

Wired, The New York Times, Backyard Poultry Magazine – they all do it. Sometimes, they screw up and get the facts wrong. In ink or in electrons, a reputable news source takes the time to say “my bad.”

If you are in the news business and want to maintain your reputation for accuracy, you publish corrections. For most topics this works just fine, but what most news organizations don’t realize is that a correction can push readers even further away from the facts if the issue at hand is close to the heart. In fact, those pithy blurbs hidden on a deep page in every newspaper point to one of the most powerful forces shaping the way you think, feel and decide – a behavior keeping you from accepting the truth.

In 2006, Brendan Nyhan and Jason Reifler at the University of Michigan and Georgia State University created fake newspaper articles about polarizing political issues. The articles were written in a way that would confirm a widespread misconception about certain ideas in American politics. As soon as a person read a fake article, researchers handed over a true article that corrected the first. For instance, one article suggested the United States found weapons of mass destruction in Iraq. The next said the U.S. never found them, which was the truth. Those opposed to the war or who had strong liberal leanings tended to disagree with the original article and accept the second. Those who supported the war and leaned more toward the conservative camp tended to agree with the first article and strongly disagree with the second. These reactions shouldn’t surprise you. What should give you pause, though, is how conservatives felt about the correction. After reading that there were no WMDs, they reported being even more certain than before that there actually were WMDs and that their original beliefs were correct.

They repeated the experiment with other wedge issues like stem cell research and tax reform, and once again, they found corrections tended to increase the strength of the participants’ misconceptions if those corrections contradicted their ideologies. People on opposing sides of the political spectrum read the same articles and then the same corrections, and when new evidence was interpreted as threatening to their beliefs, they doubled down. The corrections backfired.

Once something is added to your collection of beliefs, you protect it from harm. You do it instinctively and unconsciously when confronted with attitude-inconsistent information. Just as confirmation bias shields you when you actively seek information, the backfire effect defends you when the information seeks you, when it blindsides you. Coming or going, you stick to your beliefs instead of questioning them. When someone tries to correct you, tries to dilute your misconceptions, it backfires and strengthens them instead. Over time, the backfire effect helps make you less skeptical of those things which allow you to continue seeing your beliefs and attitudes as true and proper.

In 1976, when Ronald Reagan was running for president of the United States, he often told a story about a Chicago woman who was scamming the welfare system to earn her income.

Reagan said the woman had 80 names, 30 addresses and 12 Social Security cards, which she used to get food stamps along with more than her share of money from Medicaid and other welfare entitlements. He said she drove a Cadillac, didn’t work and didn’t pay taxes. He talked about this woman, whom he never named, in just about every small town he visited, and it tended to infuriate his audiences. The story solidified the term “Welfare Queen” in American political discourse and influenced not only the national conversation for the next 30 years, but public policy as well. It also wasn’t true.

Sure, there have always been people who scam the government, but no one who fit Reagan’s description ever existed. The woman most historians believe Reagan’s anecdote was based on was a con artist with four aliases who moved from place to place wearing disguises, not some stay-at-home mom surrounded by mewling children.

Despite the debunking and the passage of time, the story is still alive. The imaginary lady who Scrooge McDives into a vault of food stamps between naps while hardworking Americans struggle down the street still appears every day on the Internet. The memetic staying power of the narrative is impressive. Some version of it continues to turn up every week in stories and blog posts about entitlements, even though the truth is a click away.

Psychologists call stories like these narrative scripts, stories that tell you what you want to hear, stories which confirm your beliefs and give you permission to continue feeling as you already do. If believing in welfare queens protects your ideology, you accept it and move on. You might find Reagan’s anecdote repugnant or risible, but you’ve accepted without question a similar anecdote about pharmaceutical companies blocking research, or unwarranted police searches, or the health benefits of chocolate. You’ve watched a documentary about the evils of…something you disliked, and you probably loved it. For every Michael Moore documentary passed around as the truth there is an anti-Michael Moore counter documentary with its own proponents trying to convince you their version of the truth is the better choice.

A great example of selective skepticism is the website literallyunbelievable.org. They collect Facebook comments of people who believe articles from the satire newspaper The Onion are real. Articles about Oprah offering a select few the chance to be buried with her in an ornate tomb, or the construction of a multi-billion dollar abortion supercenter, or NASCAR awarding money to drivers who make homophobic remarks are all commented on with the same sort of “yeah, that figures” outrage. As the psychologist Thomas Gilovich said, “When examining evidence relevant to a given belief, people are inclined to see what they expect to see, and conclude what they expect to conclude…for desired conclusions, we ask ourselves, ‘Can I believe this?,’ but for unpalatable conclusions we ask, ‘Must I believe this?’”

This is why hardcore doubters who believe Barack Obama was not born in the United States will never be satisfied with any amount of evidence put forth suggesting otherwise. When the Obama administration released his long-form birth certificate in April of 2011, the reaction from birthers was as the backfire effect predicts. They scrutinized the timing, the appearance, the format – they gathered together online and mocked it. They became even more certain of their beliefs than before. The same has been and will forever be true for any conspiracy theory or fringe belief. Contradictory evidence strengthens the position of the believer. It is seen as part of the conspiracy, and missing evidence is dismissed as part of the coverup.

This helps explain how strange, ancient and kooky beliefs resist science, reason and reportage. It goes deeper though, because you don’t see yourself as a kook. You don’t think thunder is a deity going for a 7-10 split. You don’t need special underwear to shield your libido from the gaze of the moon. Your beliefs are rational, logical and fact-based, right?

Well…consider a topic like spanking. Is it right or wrong? Is it harmless or harmful? Is it lazy parenting or tough love? Science has an answer, but let’s get to that later. For now, savor your emotional reaction to the issue and realize you are willing to be swayed, willing to be edified on a great many things, but you keep a special set of topics separate.

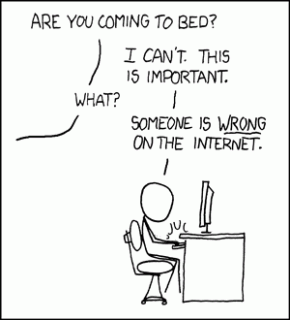

The last time you got into, or sat on the sidelines of, an argument online with someone who thought they knew all there was to know about health care reform, gun control, gay marriage, climate change, sex education, the drug war, Joss Whedon or whether or not 0.9999 repeated to infinity was equal to one – how did it go?

Did you teach the other party a valuable lesson? Did they thank you for edifying them on the intricacies of the issue after cursing their heretofore ignorance, doffing their virtual hat as they parted from the keyboard a better person?

No, probably not. Most online battles follow a similar pattern, each side launching attacks and pulling evidence from deep inside the web to back up their positions until, out of frustration, one party resorts to an all-out ad hominem nuclear strike. If you are lucky, the comment thread will get derailed in time for you to keep your dignity, or a neighboring commenter will help initiate a text-based dogpile on your opponent.

What should be evident from the studies on the backfire effect is that you can never win an argument online. When you start to pull out facts and figures, hyperlinks and quotes, you are actually making your opponent even more sure of their position than before you started the debate. As they match your fervor, the same thing happens in your skull. The backfire effect pushes both of you deeper into your original beliefs.

Have you ever noticed the peculiar tendency you have to let praise pass through you, but feel crushed by criticism? A thousand positive remarks can slip by unnoticed, but one “you suck” can linger in your head for days. One hypothesis as to why this and the backfire effect happen is that you spend much more time considering information you disagree with than you do information you accept. Information that lines up with what you already believe passes through the mind like a vapor, but when you come across something that threatens your beliefs, something that conflicts with your preconceived notions of how the world works, you seize up and take notice. Some psychologists speculate there is an evolutionary explanation. Your ancestors paid more attention and spent more time thinking about negative stimuli than positive because bad things required a response. Those who failed to address negative stimuli failed to keep breathing.

In 1992, Peter Ditto and David Lopez conducted a study in which subjects dipped little strips of paper into cups filled with saliva. The paper wasn’t special, but the psychologists told half the subjects the strips would turn green if they had a terrible pancreatic disorder and told the other half the strips would turn green if they were free and clear. For both groups, they said the reaction would take about 20 seconds. The people who were told the strip would turn green if they were safe tended to wait much longer to see the results, far past the time they were told it would take. When it didn’t change color, 52 percent retested themselves. The other group, the ones for whom a green strip would be very bad news, tended to wait the 20 seconds and move on. Only 18 percent retested.

When you read a negative comment, when someone shits on what you love, when your beliefs are challenged, you pore over the data, picking it apart, searching for weakness. The cognitive dissonance locks up the gears of your mind until you deal with it. In the process you form more neural connections, build new memories and put out effort – once you finally move on, your original convictions are stronger than ever.

When our bathroom scale delivers bad news, we hop off and then on again, just to make sure we didn’t misread the display or put too much pressure on one foot. When our scale delivers good news, we smile and head for the shower. By uncritically accepting evidence when it pleases us, and insisting on more when it doesn’t, we subtly tip the scales in our favor.

– Psychologist Dan Gilbert in The New York Times

The backfire effect is constantly shaping your beliefs and memory, keeping you consistently leaning one way or the other through a process psychologists call biased assimilation. Decades of research into a variety of cognitive biases shows you tend to see the world through thick, horn-rimmed glasses forged of belief and smudged with attitudes and ideologies. When scientists had people watch Bob Dole debate Bill Clinton in 1996, they found supporters before the debate tended to believe their preferred candidate won. In 2000, when psychologists studied Clinton lovers and haters throughout the Lewinsky scandal, they found Clinton lovers tended to see Lewinsky as an untrustworthy homewrecker and found it difficult to believe Clinton lied under oath. The haters, of course, felt quite the opposite. Flash forward to 2011, and you have Fox News and MSNBC battling for cable journalism territory, both promising a viewpoint which will never challenge the beliefs of a certain portion of the audience. Biased assimilation guaranteed.

Biased assimilation doesn’t only happen in the presence of current events. Michael Hulsizer of Webster University, Geoffrey Munro at Towson, Angela Fagerlin at the University of Michigan, and Stuart Taylor at Kent State conducted a study in 2004 in which they asked liberals and conservatives to opine on the 1970 shootings at Kent State, where National Guard soldiers fired on Vietnam War demonstrators, killing four and injuring nine.

As with any historical event, the details of what happened at Kent State began to blur within hours. In the years since, books and articles and documentaries and songs have plotted a dense map of causes and motivations, conclusions and suppositions with points of interest in every quadrant. In the weeks immediately after the shooting, psychologists surveyed the students at Kent State who witnessed the event and found that 6 percent of the liberals and 45 percent of the conservatives thought the National Guard was provoked. Twenty-five years later, they asked current students what they thought. In 1995, 62 percent of liberals said the soldiers committed murder, but only 37 percent of conservatives agreed. Five years later, they asked the students again and found conservatives were still more likely to believe the protesters overran the National Guard, while liberals were more likely to see the soldiers as the aggressors. What is astonishing is that they found the beliefs were stronger the more the participants said they knew about the event. The bias for the National Guard or the protesters was stronger the more knowledgeable the subject. The people who had only a basic understanding experienced a weak backfire effect when considering the evidence. The backfire effect pushed those who had put more thought into the matter farther from the gray areas.

Geoffrey Munro at the University of California and Peter Ditto at Kent State University concocted a series of fake scientific studies in 1997. One set of studies said homosexuality was probably a mental illness. The other set suggested homosexuality was normal and natural. They then separated subjects into two groups; one group said they believed homosexuality was a mental illness and the other did not. Each group then read the fake studies full of pretend facts and figures suggesting their worldview was wrong. On either side of the issue, after reading studies that did not support their beliefs, most people didn’t report an epiphany, a realization they’d been wrong all these years. Instead, they said the issue was something science couldn’t understand. When asked about other topics later on, like spanking or astrology, these same people said they no longer trusted research to determine the truth. Rather than shed their belief and face facts, they rejected science altogether.

The human understanding when it has once adopted an opinion draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises, or else by some distinction sets aside and rejects, in order that by this great and pernicious predetermination the authority of its former conclusion may remain inviolate.

– Francis Bacon

Science and fiction once imagined the future in which you now live. Books and films and graphic novels of yore featured cyberpunks surfing data streams and personal communicators joining a chorus of beeps and tones all around you. Short stories and late-night pocket-protected gabfests portended a time when the combined knowledge and artistic output of your entire species would be instantly available at your command, and billions of human lives would be connected and visible to all who wished to be seen.

So, here you are, in the future surrounded by computers which can deliver to you just about every fact humans know, the instructions for any task, the steps to any skill, the explanation for every single thing your species has figured out so far. This once imaginary place is now your daily life.

So, if the future we were promised is now here, why isn’t it the ultimate triumph of science and reason? Why don’t you live in a social and political technotopia, an empirical nirvana, an Asgard of analytical thought minus the jumpsuits and neon headbands where the truth is known to all?

Among the many biases and delusions in between you and your microprocessor-rich, skinny-jeaned Arcadia is a great big psychological beast called the backfire effect. It’s always been there, meddling with the way you and your ancestors understood the world, but the Internet unchained its potential, elevated its expression, and you’ve been none the wiser for years.

As social media and advertising progress, confirmation bias and the backfire effect will become more and more difficult to overcome. You will have more opportunities to pick and choose the kind of information which gets into your head along with the kinds of outlets you trust to give you that information. In addition, advertisers will continue to adapt, not only generating ads based on what they know about you, but creating advertising strategies on the fly based on what has and has not worked on you so far. The media of the future may be delivered based not only on your preferences, but on how you vote, where you grew up, your mood, the time of day or year: every element of you which can be quantified. In a world where everything comes to you on demand, your beliefs may never be challenged.

Three thousand spoilers per second rippled away from Twitter in the hours before Barack Obama walked up to his presidential lectern and told the world Osama bin Laden was dead.

Novelty Facebook pages, get-rich-quick websites and millions of emails, texts and instant messages related to the event preceded the official announcement on May 1, 2011. Stories went up, comments poured in, search engines burned white hot. Between 7:30 and 8:30 p.m. on the first day, Google searches for bin Laden saw a 1 million percent increase from the number the day before. YouTube videos of Toby Keith and Lee Greenwood started trending. Unprepared news sites sputtered and strained to deliver page after page of updates to a ravenous public.

It was a dazzling display of how much the world of information exchange had changed since September 2001, except in one predictable and probably immutable way. Within minutes of learning about SEAL Team Six, the headshot tweeted around the world and the swift burial at sea, conspiracy theories began to bounce against the walls of our infinitely voluminous echo chamber. Days later, when the world learned it would be denied photographic proof, the conspiracy theories grew legs, left the ocean and evolved into self-sustaining undebunkable life forms.

As information technology progresses, the behaviors you are most likely to engage in when it comes to belief, dogma, politics and ideology seem to remain fixed. In a world blossoming with new knowledge, burgeoning with scientific insights into every element of the human experience, like most people, you still pick and choose what to accept even when it comes out of a lab and is based on 100 years of research.

So, how about spanking? After reading all of this, do you think you are ready to know what science has to say about the issue? Here’s the skinny – psychologists are still studying the matter, but the current thinking says spanking generates compliance in children under seven if done infrequently, in private and using only the hands. Now, here’s a slight correction: other methods of behavior modification like positive reinforcement, token economies, time out and so on are also quite effective and don’t require any violence.

Reading those words, you probably had a strong emotional response. Now that you know the truth, have your opinions changed?

Source: www.alternet.org

This story is cross-posted from You Are Not So Smart.

Check out a copy of the book “You Are Not So Smart.”

Debbie Menon is an independent writer based in Dubai. Her main focus is the US-Middle East conflicts. Her writing has been featured in many print and online publications.

Her writing reflects the incredible resilience, the almost superhuman steadfastness, of the occupied and oppressed Palestinians, who are now facing the prospect of a final round of ethnic cleansing. She is committed to exposing the Israel lobbies' control of U.S. Middle East policy, a control which amounts to treason by the Zionist lobbies in America and their stooges in Congress, and which guarantees there can never be a peaceful resolution of the Israeli-Palestinian conflict, only catastrophe for all, in the region and the world.

Her mission is to inform and educate internet readers seeking unfiltered information about real events in the US/Middle East conflicts that are unreported, underreported, or distorted in the American media. PS: For those of her detractors who think she is being selective and even "one-sided," tough; that is the point of her work: to present an alternative view and interpretation of the US-Israel-Middle East conflict, one that has been completely ignored in mainstream discourse.

The purpose is to look at the current reality from a different and critical perspective, not simply to rehash the pro-US/Israel perspective, the smoke and mirrors that have been allowed to utterly and completely dominate mainstream discourse.
